Centralized Infrastructure’s Breaking Point is Spurring the Rise of Distributed AI Compute

For some time now, the infrastructure supporting modern AI has operated under an architectural problem that few acknowledge openly. When DNS automation fails in a single region, or when a scheduler fault propagates unchecked across interconnected systems, the consequences ripple outward far faster than engineers can respond.

The October AWS outage illustrated this vulnerability with brutal clarity. What the public saw as a “brief outage” was actually a window into a structural fragility that has come to define modern computing: too much of the world’s digital infrastructure depends on the same few control planes, running in the same few regions, operated by the same handful of companies.

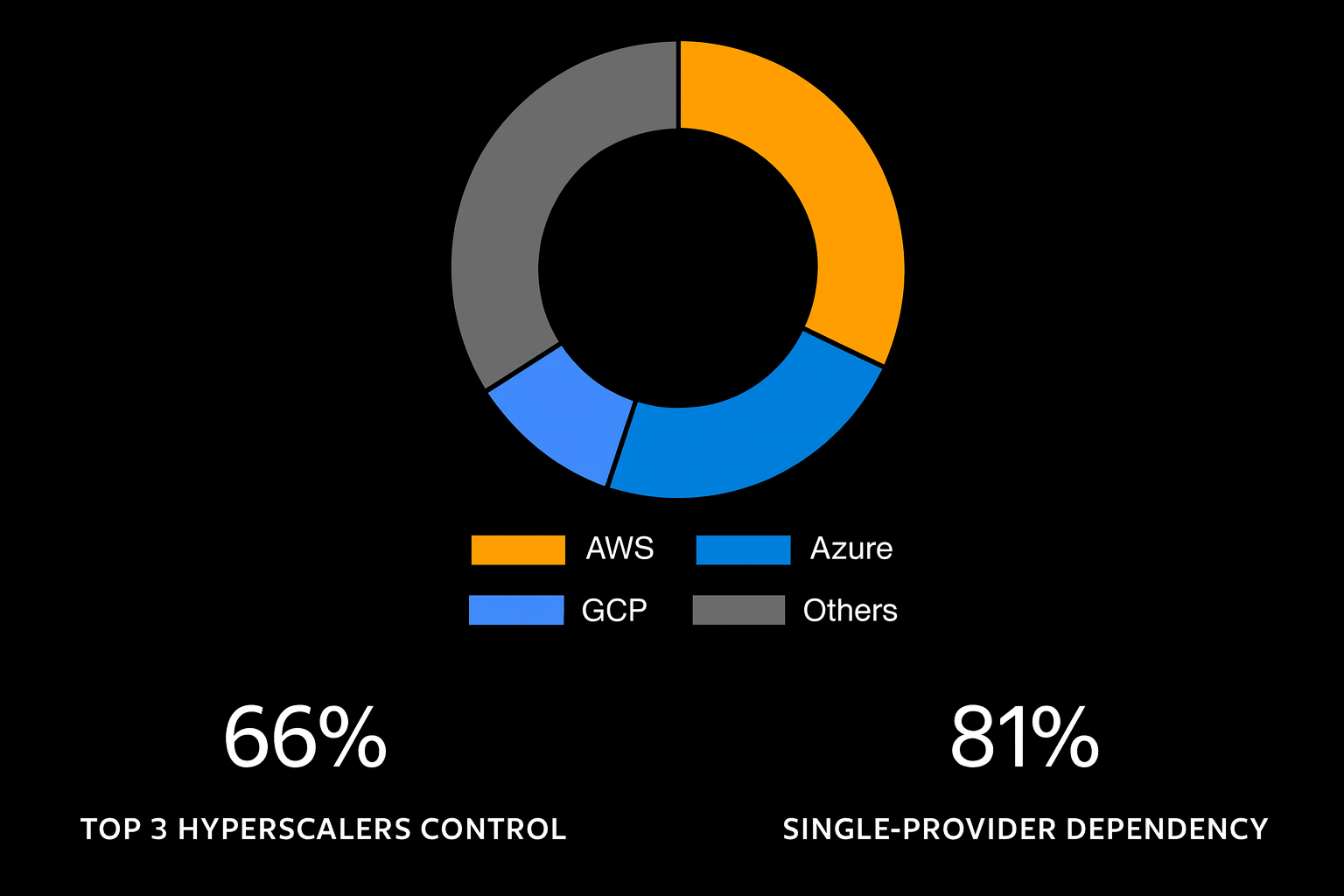

This concentration is not accidental. It is the result of decades of cloud consolidation, massive capex spending, and network effects that reward scale. On this point, Andrew Sobko, founder of a cross-border compute marketplace, recently noted at the Blockchain Futurist conference that AWS, Azure, and Google together control approximately 66% of global cloud infrastructure revenue (a share that continues to climb as AI workloads migrate to the cloud).

He added that behind this number sits an even tighter concentration of supply: Nvidia currently holds roughly 80% of the AI GPU market, creating a bottleneck in which demand for high-performance accelerators vastly outpaces available chips.

A problem that is only accelerating, but a solution is here!

The recent capex cycle seems to have only deepened these risks: Amazon, Google, Meta, and Microsoft are collectively spending somewhere between $315 and $320 billion on capital expenditure in 2025, with this figure potentially climbing into the trillions by the end of the 2020s.

Amid these issues, a technical counter-design, decentralized compute infrastructure, has emerged and gained considerable traction. The core architecture is straightforward but novel: idle hardware becomes liquid through an open marketplace, with jobs routed to wherever capacity and energy exist. Execution is verified cryptographically so purchasers can trust heterogeneous suppliers.
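The article does not say which cryptographic scheme is used to verify execution. As a rough illustration of the general idea only, the sketch below assumes the simplest possible approach: the same job runs on several independent nodes, each reports a SHA-256 digest of its output, and a result is accepted when enough digests agree. The names `result_digest` and `verify_by_quorum` are hypothetical, and the approach assumes bit-for-bit deterministic outputs, which GPU workloads do not always produce. It is not a zero-knowledge proof.

```python
from __future__ import annotations

import hashlib
from collections import Counter
from typing import Optional


def result_digest(output: bytes) -> str:
    """Fingerprint a job's output so results can be compared cheaply."""
    return hashlib.sha256(output).hexdigest()


def verify_by_quorum(
    digests: dict[str, str], quorum: int
) -> tuple[Optional[str], list[str]]:
    """Accept the most common digest if at least `quorum` nodes reported it.

    `digests` maps a (hypothetical) node id to the digest that node reported.
    Returns the accepted digest, or None, plus the nodes that disagreed.
    """
    if not digests:
        return None, []
    digest, votes = Counter(digests.values()).most_common(1)[0]
    if votes < quorum:
        return None, []
    dissenters = sorted(node for node, d in digests.items() if d != digest)
    return digest, dissenters


# Illustrative data: two nodes agree, one reports a different result.
reports = {
    "node-a": result_digest(b"weights-v1"),
    "node-b": result_digest(b"weights-v1"),
    "node-c": result_digest(b"tampered"),
}
accepted, dissenters = verify_by_quorum(reports, quorum=2)
```

Redundant execution trades extra compute for trust, so a production marketplace would likely sample only a fraction of jobs this way or rely on succinct proofs instead.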

The numbers support the case for such a system. Approximately 30 to 40% of GPUs globally sit unused, and a functioning market of this sort can convert that idle capacity into productive resources, operating essentially as a stock exchange for compute power.

Sitting at the helm of this transformation is Argentum, a platform that treats compute as an open marketplace for AI training and inference, underpinned by on-chain service-level agreements that enforce performance standards programmatically. Commenting on the project’s core design, Sobko said:

“Argentum is purpose-built for high-performance GPU tasks. It emphasizes zero-knowledge privacy for cross-border operations – a technical requirement for enterprises deploying AI across geographies with varying data residency requirements.”

The distinction matters in practice, as anyone with compatible GPUs can now join the ecosystem simply by installing the node software. Purchasers specify their computational targets and budget parameters, matching is conducted on a price-performance basis, and settlement is finalized only once the agreed latency, throughput, and accuracy bounds are confirmed.
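To make the matching and settlement flow concrete, here is a minimal sketch. It assumes that "price-performance" means benchmarked throughput per dollar and that settlement is a simple check of measured values against the agreed bounds. The `Offer` and `Job` types, their fields, and both functions are hypothetical and are not taken from Argentum's software.

```python
from __future__ import annotations

from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class Offer:
    node_id: str
    price_per_hour: float   # assumed unit: USD per GPU-hour
    throughput: float       # assumed unit: benchmarked samples per second
    latency_ms: float


@dataclass(frozen=True)
class Job:
    max_price_per_hour: float
    min_throughput: float
    max_latency_ms: float
    min_accuracy: float


def match(job: Job, offers: list[Offer]) -> Optional[Offer]:
    """Return the eligible offer with the best throughput per dollar."""
    eligible = [
        o for o in offers
        if 0 < o.price_per_hour <= job.max_price_per_hour
        and o.throughput >= job.min_throughput
        and o.latency_ms <= job.max_latency_ms
    ]
    if not eligible:
        return None
    return max(eligible, key=lambda o: o.throughput / o.price_per_hour)


def can_settle(job: Job, latency_ms: float, throughput: float, accuracy: float) -> bool:
    """Release payment only if every measured value is within the agreed bounds."""
    return (
        latency_ms <= job.max_latency_ms
        and throughput >= job.min_throughput
        and accuracy >= job.min_accuracy
    )


# Illustrative numbers only.
job = Job(max_price_per_hour=2.5, min_throughput=500, max_latency_ms=80, min_accuracy=0.9)
offers = [
    Offer("node-a", price_per_hour=2.0, throughput=1000, latency_ms=40),
    Offer("node-b", price_per_hour=1.0, throughput=600, latency_ms=60),
    Offer("node-c", price_per_hour=0.5, throughput=200, latency_ms=30),  # too slow
]
winner = match(job, offers)
```

Here `node-b` wins despite lower raw throughput because it delivers more throughput per dollar, while `node-c` is filtered out for missing the throughput floor. A real marketplace would presumably weigh more signals, such as reliability history or energy cost.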

Such a routing architecture means that jobs naturally flow toward geographies with lower costs, allowing second-life GPUs to be deployed where workload characteristics permit (without sacrificing the operational controls enterprises expect from production infrastructure).

Lastly, whereas projects like Akash, Render, and Golem focus on generic compute or CPU-based workloads, Argentum enables optimization at every layer, be it GPU utilization scheduling, real-time bidding mechanisms, or adaptive benchmarking that continuously improves node selection accuracy.

A new frontier is in the offing!

From the outside looking in, the intersection of Web3 and AI is more than mere hype or decentralization theater, as it addresses genuine architectural vulnerabilities inherent in today’s centralized infrastructure. And, as AI adoption increases and cascading failures like the AWS outage become more severe and frequent, the need for alternative architectures is seemingly shifting from niche interest to operational necessity.